Securing Docker on an Ubuntu VPS with a Host-Based Firewall

For anyone interested in using Docker, the first question to ask is: how are you going to secure it? Is it locally hosted? Is the host behind a network firewall? Is it hosted on a VPS? Consider each of these questions, because depending on the answers you may be leaving your server exposed to attack. Mitigating these exposures should be your first step before putting the server into production, since anything reachable from the internet can be probed for exploits and attacked. In this blog post, I will share my first-hand account of securing Docker on an Ubuntu VPS.

Is your VPS Exposed?

I self-host all of my web services (other than email), and everything sits behind a network firewall. It's the typical firewall built into a router: using port forwarding, I expose only the specific hosts and ports that need to be reachable from the web. With this strategy, a firewall protects my network from the internet.

A VPS doesn't necessarily work the same way. A VPS hosted in a data center may be assigned a public IP address directly, which is similar to plugging your home PC straight into your modem. That public IP address lets anyone on the internet attempt to connect directly to the server, which means it can be probed, scanned for vulnerabilities, and attacked.

I'm not familiar with buying servers from VPS providers, so I was surprised to learn that my colleague's Ubuntu server had no network firewall protecting it at all. In that situation, your only defensive option is a Host-Based Firewall.

Understanding your Attack Surface

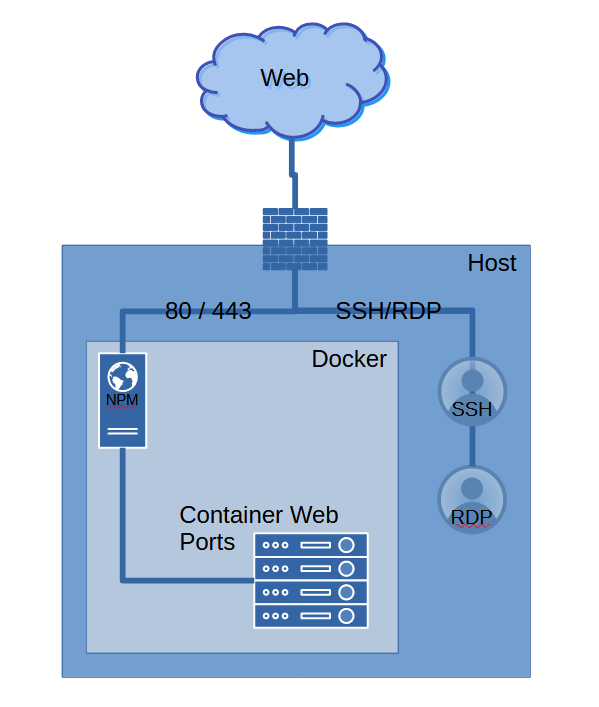

After my colleague asked me to help secure his VPS, I started by looking at its Attack Surface. Because the server sat directly on the web with a public IP address, any service listening on an open port was open to network attacks. Services like SSH and RDP were reachable, and any additional ports Docker published would be exposed as well. An attacker interested in the server could run an Nmap scan to find every listening port, then target whichever services turned up.
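To make the idea of an attack surface concrete, here is a minimal Python sketch of what a port scanner like Nmap does in its simplest mode: attempt a full TCP connection to each port and record which ones accept. The helper names and the port list are my own illustrative assumptions and do not come from any tool. Only scan machines you own or are authorized to test.

```python
# Minimal sketch of a TCP "connect scan"; helper names are hypothetical.
import socket
import sys


def is_tcp_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a full TCP connection to host:port succeeds."""
    try:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns an error code (0 = connected) instead of raising
            return sock.connect_ex((host, port)) == 0
    except OSError:
        # e.g. the host name could not be resolved
        return False


def scan_ports(host: str, ports, timeout: float = 1.0) -> list:
    """Return the subset of `ports` on `host` that accept TCP connections."""
    return [port for port in ports if is_tcp_port_open(host, port, timeout)]


if __name__ == "__main__":
    # Illustrative port list: SSH, HTTP, HTTPS, MySQL/MariaDB, RDP, PostgreSQL.
    COMMON_PORTS = [22, 80, 443, 3306, 3389, 5432]
    # Run from a machine outside the VPS and pass its public IP as the argument.
    # Defaults to localhost so running it without arguments is harmless.
    target = sys.argv[1] if len(sys.argv) > 1 else "127.0.0.1"
    print(f"Open TCP ports on {target}: {scan_ports(target, COMMON_PORTS)}")
```

Nmap does far more than this (SYN scans, service and version detection). Still, the sketch shows that every listening port is visible to anyone who can reach the IP address.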

Since the host has no network firewall to block attacks from the internet, it is up to the administrator to find an alternative. A Host-Based Firewall is the ideal choice in this case. It lets us explicitly allow only the ports and services that should be reachable from the internet and block everything else.

Securing the Docker Host is extremely important because of the large number of web services that could be exposed to attack. My own Docker Instances are a good example: my primary server hosts 77 containers. Not every container exposes services to the web, since many of them sit on their own Virtual Docker Networks used only for communication between containers in a "stack". However, some Docker Networks communicate with other Docker Networks via the Host.

Understanding how Containers Communicate

Docker Containers can get messy, and I'm unsure what the best practice is for running multiple databases on a single host. One common dilemma an administrator will face comes from the fact that many containers use a database to store information. Example databases include MariaDB, PostgreSQL, and MySQL.

If you install something like WordPress, the stack may include its own MySQL database. If you deploy containers for three WordPress websites, you can either deploy a separate database for each WordPress instance or use a single database for all of them.

It is extremely tempting to use a single Database Container for multiple applications. However, that creates a networking problem. Normally, a stack comes with its own isolated network, so the database is only reachable by the container that needs it. A database that has to serve many containers across different stacks may end up with its listening port published on the host, and without a network or host-based firewall, that port is exposed to the internet as well. This is a critical concern, as public-facing databases are a huge target for attackers. If you have a perimeter firewall, you can mitigate the risk. If you don't, then carefully consider which ports are exposed to the host and the internet whenever you deploy new containers.
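One way to check a running host for this problem is to look at where each published port is bound. The Python sketch below parses the Ports column of `docker ps` (values like `0.0.0.0:3306->3306/tcp, :::3306->3306/tcp`). It flags bindings on all interfaces (`0.0.0.0` or `::`), which are reachable from the internet unless something else blocks them. It assumes the output was produced with `docker ps --format '{{.Names}}|{{.Ports}}'`. The `|` separator and the helper names are my own choices, and the parser only handles the port formats shown in its comments.

```python
# Minimal sketch; helper names and the "|" separator are assumptions.
from typing import List, NamedTuple


class Binding(NamedTuple):
    container: str
    host_ip: str
    host_port: str       # may be a range such as "8000-8001"
    container_port: str  # e.g. "3306/tcp"


# Host addresses meaning "listen on every interface", including the public IP.
ALL_INTERFACES = {"0.0.0.0", "::"}


def parse_ports(container: str, ports_field: str) -> List[Binding]:
    """Parse one Ports value, e.g. "0.0.0.0:3306->3306/tcp, [::]:3306->3306/tcp".

    Entries without "->" (e.g. "80/tcp") are exposed by the image but not
    published on the host, so they are skipped.
    """
    bindings = []
    for entry in (e.strip() for e in ports_field.split(",")):
        if "->" not in entry:
            continue
        host_side, container_port = entry.split("->", 1)
        # rsplit keeps IPv6 forms like ":::3306" or "[::]:3306" intact
        host_ip, host_port = host_side.rsplit(":", 1)
        bindings.append(Binding(container, host_ip.strip("[]"), host_port, container_port))
    return bindings


def publicly_bound(docker_ps_output: str) -> List[Binding]:
    """Return every binding that listens on all interfaces."""
    exposed = []
    for line in docker_ps_output.splitlines():
        name, _, ports = line.partition("|")
        exposed.extend(b for b in parse_ports(name, ports) if b.host_ip in ALL_INTERFACES)
    return exposed


if __name__ == "__main__":
    # Sample input in the assumed format; container names are made up.
    sample = (
        "shared-db|0.0.0.0:3306->3306/tcp, :::3306->3306/tcp\n"
        "cache|127.0.0.1:6379->6379/tcp\n"
        "wordpress-1|80/tcp\n"
    )
    for b in publicly_bound(sample):
        print(f"{b.container}: {b.host_ip}:{b.host_port} -> {b.container_port}")
```

On a real host, you would feed in the actual `docker ps` output instead of the sample string. A shared database bound to `0.0.0.0` is exactly the kind of result to act on.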

Using a Host-Based Firewall

Since a firewall is needed and there is no network firewall, the only option is a Host-Based Firewall on the Ubuntu host itself. Ubuntu ships with UFW (Uncomplicated Firewall), which is typically inactive by default. Enabling it is straightforward if you follow the official documentation.
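As a sketch of a basic default-deny UFW setup, the Python snippet below builds the usual `ufw` commands: deny incoming, allow outgoing, allow a short list of TCP ports, then enable. It only prints them unless you explicitly turn off the dry run. The port list (22 for SSH, 80 and 443 for web traffic) is an assumption about your setup. If SSH runs on another port, change it, because enabling UFW without allowing SSH can lock you out of a remote VPS.

```python
# Minimal sketch; the helper names and default port list are assumptions.
import subprocess
from typing import Iterable, List


def ufw_baseline(allowed_tcp_ports: Iterable[int] = (22, 80, 443)) -> List[List[str]]:
    """Return a default-deny UFW baseline as argument lists (nothing runs here)."""
    commands = [
        ["ufw", "default", "deny", "incoming"],
        ["ufw", "default", "allow", "outgoing"],
    ]
    # Allow SSH and web ports *before* enabling, so the current SSH session survives.
    commands += [["ufw", "allow", f"{port}/tcp"] for port in allowed_tcp_ports]
    # --force skips the interactive "may disrupt existing ssh connections" prompt.
    commands.append(["ufw", "--force", "enable"])
    return commands


def run_commands(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it with sudo only when dry_run is False."""
    for cmd in commands:
        print(("[dry run] " if dry_run else "") + " ".join(cmd))
        if not dry_run:
            subprocess.run(["sudo", *cmd], check=True)


if __name__ == "__main__":
    run_commands(ufw_baseline())  # prints only; pass dry_run=False to apply
```

After applying the rules, `sudo ufw status verbose` lists the active rules. Keep in mind that these rules govern traffic to services on the host itself.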

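However, you're going to run into a snag if you're using Docker with UFW. By default, UFW only protects services on the host OS, not ports published by Docker. In short, Docker manages its own iptables rules for published ports, so traffic destined for a container is forwarded to it without passing through UFW's input rules. I'm not versed enough to give you all the details, but this excellent GitHub link provides a more in-depth explanation of what is going on.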

Included in the GitHub link is a potential solution. By changing how you configure UFW with the techniques described there, you can effectively put Docker behind the firewall. This is fantastic, but I haven't made it work myself just yet. I intend to go back and make UFW work with Docker. In the meantime, I am careful to place each database on a Docker Network shared only with the containers that require access to it. This way, I am not exposing the databases to the web in any way.
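If your stacks are defined in Docker Compose files, you can check this practice before anything is deployed. The Python sketch below takes the `services` mapping of a compose file, for example as loaded with the third-party PyYAML package via `yaml.safe_load`. It warns about any database service that publishes ports beyond `127.0.0.1`. Recognizing databases by image name (mysql, mariadb, postgres) is a simplifying assumption, and the function names are hypothetical.

```python
# Minimal sketch; helper names and image-name matching are assumptions.
from typing import Any, Dict, List

# Assumption: databases are recognized by image name only.
DB_IMAGES = {"mysql", "mariadb", "postgres"}


def image_name(image: str) -> str:
    """'docker.io/library/mariadb:11' -> 'mariadb'"""
    last = image.rsplit("/", 1)[-1]
    return last.split("@", 1)[0].split(":", 1)[0]


def is_loopback_only(port: Any) -> bool:
    """True if a compose port entry is published on 127.0.0.1 only."""
    if isinstance(port, dict):  # long syntax: {"target": 3306, "host_ip": ...}
        return port.get("host_ip") == "127.0.0.1"
    return str(port).startswith("127.0.0.1:")  # short syntax: "127.0.0.1:3306:3306"


def audit_databases(services: Dict[str, Dict[str, Any]]) -> List[str]:
    """Warn about database services whose ports are published beyond loopback.

    A database that only talks to its own app needs no `ports:` at all; the app
    reaches it by service name over the stack's Docker network.
    """
    warnings = []
    for name, svc in services.items():
        if image_name(svc.get("image", "")) not in DB_IMAGES:
            continue
        risky = [p for p in (svc.get("ports") or []) if not is_loopback_only(p)]
        if risky:
            warnings.append(f"{name}: database publishes {risky} on the host")
    return warnings


if __name__ == "__main__":
    # Hypothetical stack for illustration.
    services = {
        "db": {"image": "mariadb:11", "ports": ["3306:3306"]},
        "wordpress": {"image": "wordpress", "ports": ["8080:80"]},
    }
    for warning in audit_databases(services):
        print(warning)
```

The fix for a flagged service is usually to delete its `ports:` entry and rely on the shared stack network.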

Be aware of exposed Ports

If you don't put a Firewall in front of Docker, you should be hyper-aware of what ports are being exposed and what services go along with them. Otherwise, you're only increasing your potential attack surface. Web Servers and their databases are particularly at risk.

Always put a Firewall in front of Docker if possible, and follow Docker best practices. Exposing a Docker Host to the internet without a Firewall is risky if you're not aware of which ports you are publishing. Consider allowing only ports 80 and 443, and using NGINX Proxy Manager as your Reverse Proxy to route that traffic to your containers. NGINX Proxy Manager can also block common exploits, which is a handy feature.

Wrapping Up

You may think you're not a large enough target to be at risk of attack. However, it is easy to scan servers for open ports with tools like Nmap, so your best bet is to play it safe. Use a Host-Based Firewall on your VPS, and be aware of what's available to the public. My personal websites have been compromised before, and I haven't forgotten how surprised I was. As someone who self-hosts, I have no one to outsource my security to; it's my responsibility to maintain the security of my systems. If you maintain your own systems, playing it safe is key.

For my colleague, we have developed best practices to prevent unnecessary exposure of ports, protocols, and services. We also use UFW. While it's not perfect yet, it's a large step toward keeping things from being completely open.